Analysis of Free-Tier Cloud Compute Platforms - Amazon Web Services

In part one, we introduced the use case of a CPU-intensive workload and gathered baseline data from my MacMini.

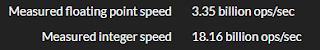

When I last checked, Amazon Web Services hosted roughly 70% of all cloud computing resources. For all practical purposes, it IS the cloud, with Microsoft, Oracle, and Google all struggling to catch up. Since my cloud experience is mostly Amazon-related, I decided to start there.

I created three free-tier EC2 (t2.micro) instances running Amazon Linux. At the time, the free tier provided only 1 vCPU, 1GB of memory, and 8GB of storage, with more available if you want to mount an S3 bucket or add EBS storage. Right away, my MacMini has an advantage, since it's a 2-processor machine and Amazon only gives me one.

But what does the free tier get you in terms of CPU performance? The answer is not much. The CPUs are Intel Xeon processors running at 2.40 GHz.

Interestingly, the SETI benchmarks for Integer and Floating-Point operations fell well below what the MacMini reported.

As you can see, the benchmarks for the three instances weren't exactly the same, but they were relatively consistent.

The Recent Average Credit is what we use to gauge how effectively these machines are able to process blocks. For three machines with the same processor and consistent benchmarks, I found these results strangely...inconsistent.

According to Amazon's documentation, the t2.micro instances operate on credited vCPU time. So even within the confines of the free tier, additional charges may be applied if CPU consumption rises above the baseline. I don't know exactly how the baseline is calculated, but based on my billing summary, running a CPU-intensive process for a week does, in fact, exceed it.

So, even though we are in the free tier, I spent $7.23 for a week's worth of computing time. Oops.
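For anyone curious about the credit mechanism, here is a rough sketch of how I understand the arithmetic, going by Amazon's burstable-instance documentation. The constants are assumptions based on the published t2.micro figures (6 credits earned per hour, a maximum balance of 144, and one credit equal to one vCPU at 100% for one minute); they are not taken from my bill and may have changed. The function names are my own, purely for illustration.

```python
# Rough model of T2 CPU credits. The constants below are ASSUMPTIONS taken
# from AWS's published t2.micro figures, not from measured billing data.
CREDITS_EARNED_PER_HOUR = 6   # assumed t2.micro earn rate
MAX_CREDIT_BALANCE = 144      # assumed t2.micro maximum accrued credits
VCPUS = 1                     # t2.micro has a single vCPU


def baseline_utilization(credits_per_hour=CREDITS_EARNED_PER_HOUR, vcpus=VCPUS):
    """One credit = one vCPU at 100% for one minute, so the sustainable
    (baseline) utilization is credits earned per hour / 60 minutes / vCPUs."""
    return credits_per_hour / 60 / vcpus


def net_credits_per_hour(utilization, credits_per_hour=CREDITS_EARNED_PER_HOUR,
                         vcpus=VCPUS):
    """Credits gained (positive) or lost (negative) per hour at a given
    average utilization, where 1.0 means 100% of every vCPU."""
    spent = utilization * 60 * vcpus
    return credits_per_hour - spent


def hours_until_empty(start_balance, utilization):
    """Hours until the balance hits zero, or None if it never drains."""
    net = net_credits_per_hour(utilization)
    if net >= 0:
        return None
    return start_balance / -net


if __name__ == "__main__":
    print(f"baseline utilization: {baseline_utilization():.0%}")
    print(f"net credits/hour at 100% CPU: {net_credits_per_hour(1.0):.0f}")
    hours = hours_until_empty(MAX_CREDIT_BALANCE, 1.0)
    print(f"full balance lasts about {hours:.1f} hours at 100% CPU")
```

Under those assumptions, a client that pins the CPU at 100% burns credits far faster than it earns them, so a full balance is gone within a few hours. That fits with a week of SETI crunching ending up well above the baseline.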

t2.micro vs MacMini

With these numbers in mind, how did the AWS instances compare to my MacMini? Basically, awful. The performance benchmarks for Integer Ops/Sec were almost identical on a core-for-core comparison, and the t2.micro instances performed 30% better on Floating-Point Ops/Sec than the MacMini.

With those numbers in mind, the shocking revelation was how poorly the BOINC/SETI client performed on the t2.micro instances. Over the two weeks of this experiment, the MacMini earned 125% more workload credits than the t2.micro. In other words, for every 5 work units analyzed by the MacMini, only 1 was returned by the t2.micro instances.
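For readers who want to check the arithmetic: a "percent more" figure and an "X for every 1" ratio are two ways of stating the same comparison, and converting between them is a one-liner. The sketch below is only illustrative. The function names are mine, and the inputs are the two figures quoted above rather than raw credit totals.

```python
def percent_more_to_ratio(percent_more):
    """'N% more' means the larger value is (1 + N/100) times the smaller."""
    return 1 + percent_more / 100


def ratio_to_percent_more(ratio):
    """An 'X for every 1' ratio means the larger value is (X - 1) * 100% more."""
    return (ratio - 1) * 100


if __name__ == "__main__":
    print(percent_more_to_ratio(125))  # 2.25
    print(ratio_to_percent_more(5))    # 400
```

By this conversion, 125% more works out to 2.25 work units for every 1, while 5 for every 1 works out to 400% more, so the side-by-side numbers are the better guide to the exact gap.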

The table below shows a side-by-side comparison of the two machine types:

Conclusion

Even with the elasticity and scalability of AWS EC2 instances, these free-tier systems aren't exactly the best and brightest as far as crunching SETI numbers is concerned. It would be easy to spin up 100 of these machines with custom AMIs to run parallel processing workloads for CPU-intensive processes. But it might be easier, faster, and cheaper to actually pay for the correct instances to do so.
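To give a sense of what "spin up 100 of these machines" might look like, here is a minimal sketch using boto3's EC2 client. It is not what I ran for this experiment. The AMI ID is a hypothetical placeholder, the helper names are mine, and the launch function assumes boto3 is installed and AWS credentials are already configured.

```python
def build_launch_request(ami_id, count, instance_type="t2.micro"):
    """Build the keyword arguments for EC2 run_instances.

    Kept separate from the actual API call so the request can be
    inspected without touching AWS.
    """
    return {
        "ImageId": ami_id,          # custom AMI with the BOINC client baked in
        "InstanceType": instance_type,
        "MinCount": count,          # fail the request unless all can launch
        "MaxCount": count,
    }


def launch_fleet(ami_id, count):
    """Launch `count` identical instances and return their instance IDs.

    Assumes boto3 is installed and credentials/region are configured.
    """
    import boto3  # third-party; imported lazily so the builder works without it

    ec2 = boto3.client("ec2")
    response = ec2.run_instances(**build_launch_request(ami_id, count))
    return [instance["InstanceId"] for instance in response["Instances"]]


if __name__ == "__main__":
    # "ami-xxxxxxxx" is a placeholder, not a real image ID.
    print(build_launch_request("ami-xxxxxxxx", 100))
```

Only `build_launch_request` runs here. Calling `launch_fleet` would actually start (and bill for) the instances, which is exactly the cost question raised above.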

Next, Google Cloud Computing
